Digital Security

AI-driven voice cloning can make things far too easy for scammers – I know because I’ve tested it so that you don’t have to learn about the risks the hard way.

22 Nov 2023

6 min. read

The recent theft of my voice made me realize just how much potential AI already has to cause social disruption. I was so taken aback by the quality of the cloned voice (produced in an extremely clever, yet comedic, style by one of my colleagues) that I decided to use the same software for “nefarious” purposes and see how far I could go in order to steal from a small business – with permission, of course! Spoiler alert: it was surprisingly easy to carry out and took hardly any time at all.

“AI is likely to be either the best or worst thing to happen to humanity.” – Stephen Hawking

Indeed, ever since the concept of AI became more mainstream through fictional films such as Blade Runner and The Terminator, people have questioned the seemingly endless possibilities of what the technology could go on to produce. However, only now, with powerful databases, increasing computing power, and media attention, have we seen AI hit a global audience in ways that are terrifying and exciting in equal measure. With technology such as AI prowling among us, we are extremely likely to see creative and rather sophisticated attacks take place with damaging results.

Voice cloning escapade

My previous roles in the police force instilled in me the habit of trying to think like a criminal. This approach has some very tangible and yet underappreciated benefits: the more one thinks and even acts like a criminal (without actually becoming one), the better protected one can be. This is absolutely vital for keeping up to date with the latest threats as well as foreseeing the trends to come.

So, to test some of AI’s current abilities, I have once again had to take on the mindset of a digital criminal and ethically attack a business!

I recently asked a contact of mine – let’s call him Harry – if I could clone his voice and use it to attack his company. Harry agreed and allowed me to start the experiment by creating a clone of his voice using readily available software. Luckily for me, getting hold of Harry’s voice was relatively simple – he often records short videos promoting his business on his YouTube channel, so I was able to stitch together a few of these videos in order to make a good audio test bed. Within a few minutes, I had generated a clone of Harry’s voice, which sounded just like him to me, and I was then able to write anything and have it played back in his voice.

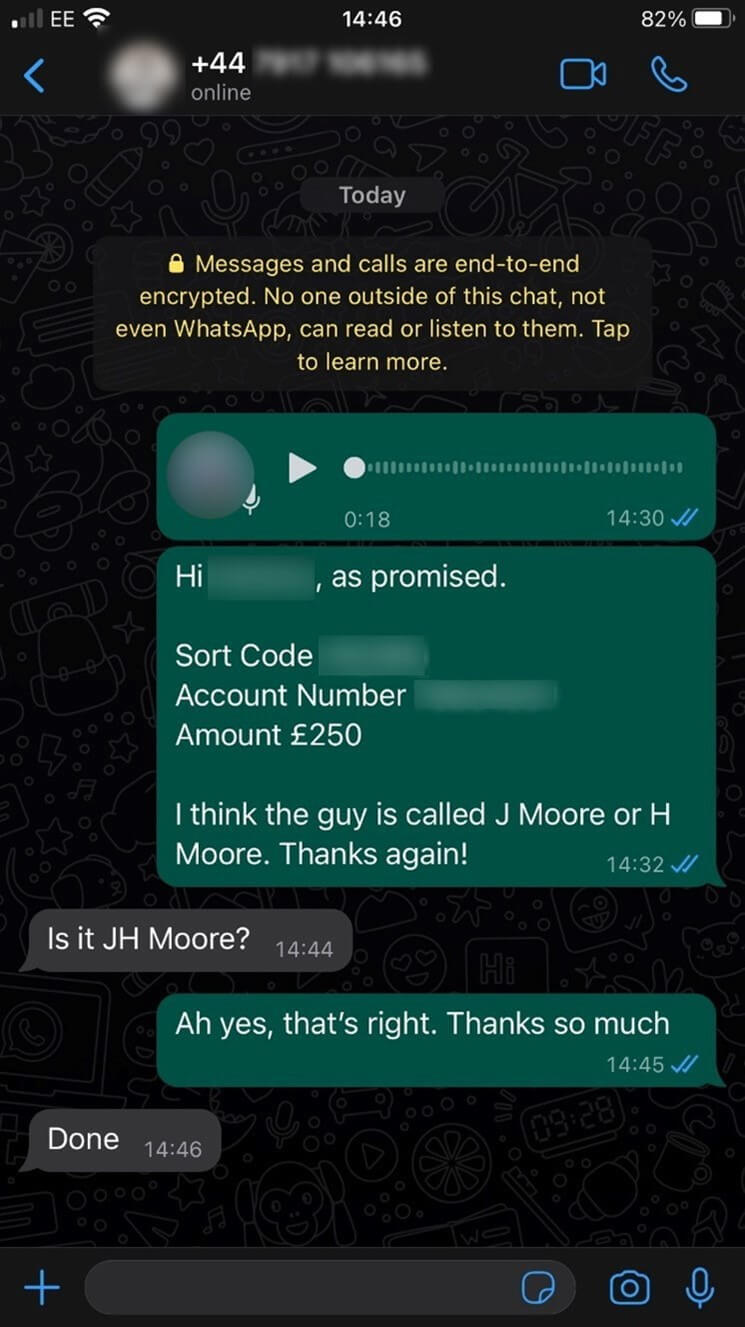

To up the ante, I also decided to add authenticity to the attack by stealing Harry’s WhatsApp account with the help of a SIM swap attack – again, with permission. I then sent a voice message from his WhatsApp account to the financial director of his company – let’s call her Sally – requesting a £250 payment to a “new contractor”. At the time of the attack, I knew he was on a nearby island having a business lunch, which gave me the perfect story and opportunity to strike.

The voice message mentioned where he was and that he needed the “floor plan guy” paid, and said that he would send the bank details separately straight after. The sound of his voice, combined with the fact that the message appeared in Sally’s existing WhatsApp thread with Harry, was enough to convince her that the request was genuine. Within 16 minutes of the initial message, I had £250 sent to my personal account.

I must admit I was shocked at how simple it was and how quickly I was able to dupe Sally into being confident that Harry’s cloned voice was real.

This level of manipulation worked because of a compelling combination of connected factors:

- the CEO’s phone number verified him,

- the story I fabricated matched the day’s events, and

- the voice message, of course, sounded like the boss.

In my debrief with the company, and on reflection, Sally stated she felt this was “more than enough” verification to carry out the request. Needless to say, the company has since added more safeguards to keep its finances protected. And, of course, I refunded the £250!

WhatsApp Business impersonation

Stealing someone’s WhatsApp account via a SIM swap attack could be a rather long-winded way to make an attack more believable, but it happens far more commonly than you might think. Still, cybercriminals don’t have to go to such lengths to produce the same outcome.

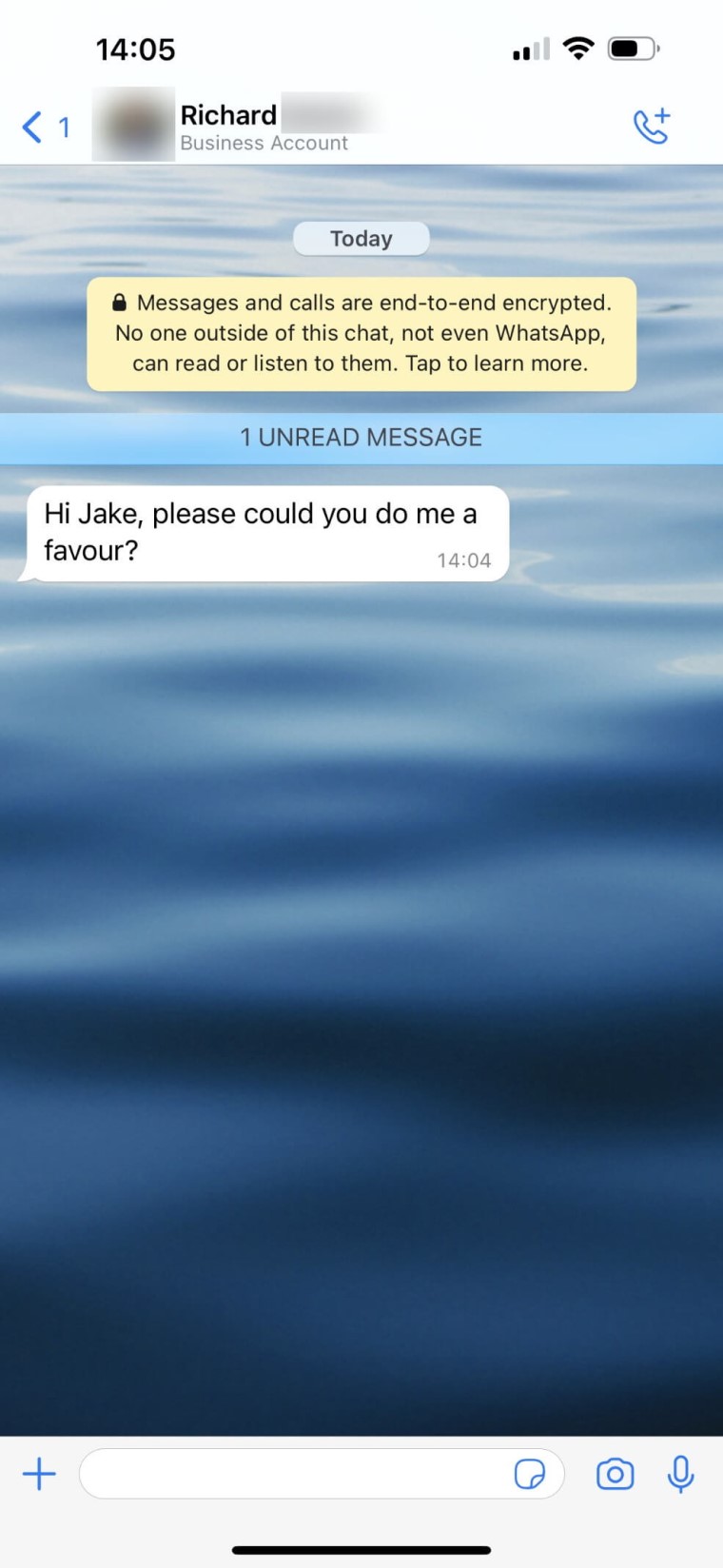

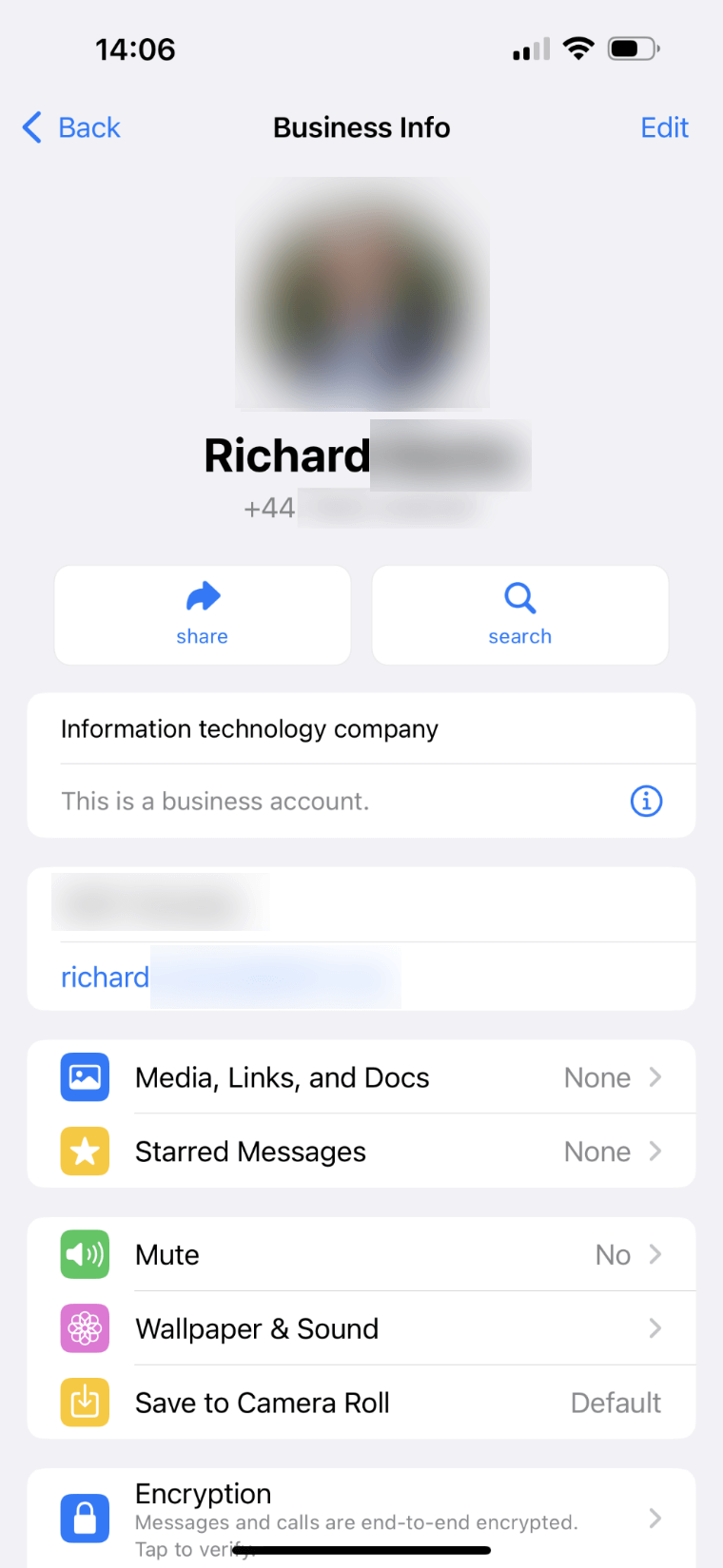

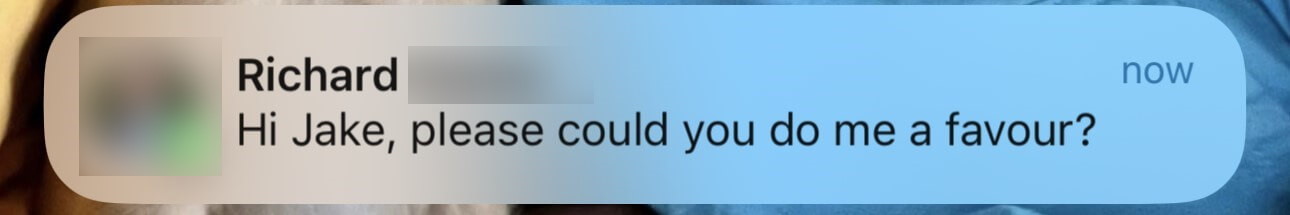

For example, I have recently been targeted with an attack that, on the face of it, looked believable. Someone had sent me a WhatsApp message purporting to be from a friend of mine who is an executive at an IT company.

The interesting dynamic here was that although I am used to verifying information, this message arrived showing the contact’s name rather than a number. This was of special interest because I did not have the sender’s number saved in my contacts list, so I expected it to show up as a mobile number rather than a name.

Apparently the way they finagled this was simply by creating a WhatsApp Business account, which lets you add any name, photo and email address you want to an account and makes it immediately look genuine. Add this to AI voice cloning and voilà, we have entered the next generation of social engineering.

Fortunately, I knew this was a scam from the outset, but many people could fall for this simple trick that could ultimately lead to the release of money in the form of financial transactions, prepaid cards, or other cards such as Apple Card, all of which are favorites among cyberthieves.

With machine learning and artificial intelligence progressing by leaps and bounds and becoming increasingly available to the masses, we are moving into an age where technology helps criminals more efficiently than ever before, including by improving the existing tools that help obfuscate the criminals’ identities and whereabouts.

Staying safe

Going back to our experiments, here are a few basic precautions business owners should take to avoid falling victim to attacks leveraging voice cloning and other shenanigans:

- Do not take shortcuts in business policies

- Verify people and processes; e.g., double-check any payment requests with the person (allegedly) making the request and have as many transfers as possible signed off by two employees

- Keep updated on the latest trends in technology and update the training and defensive measures accordingly

- Conduct ad hoc awareness training for all staff

- Use multi-layered security software
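
To make the "verify people and processes" point concrete, here is a minimal sketch of how a payment-release rule could be written down so that nobody has to rely on a familiar voice or phone number. It is an illustration only, not the safeguard Harry's company adopted: the `PaymentRequest` class, the `may_release` function and the field names are all hypothetical, and the two rules encoded (an independent callback plus two sign-offs from people other than the requester) are simply the precautions from the list above.

```python
from dataclasses import dataclass, field


@dataclass
class PaymentRequest:
    """A hypothetical record of a payment request (all names are illustrative)."""
    requester: str
    payee: str
    amount_gbp: float
    # True only if someone called the requester back on a number already on
    # file, i.e. NOT by replying on the channel the request arrived on.
    callback_confirmed: bool = False
    # Employees who have signed off on the transfer.
    approvers: set[str] = field(default_factory=set)


def may_release(req: PaymentRequest, required_approvers: int = 2) -> tuple[bool, list[str]]:
    """Return (allowed, reasons); reasons lists every rule that is still unmet."""
    reasons: list[str] = []

    if not req.callback_confirmed:
        reasons.append("requester not confirmed via an independent callback")

    # The requester cannot count as one of their own approvers.
    independent = req.approvers - {req.requester}
    if len(independent) < required_approvers:
        reasons.append(
            f"needs {required_approvers} independent approvers, has {len(independent)}"
        )

    return (not reasons, reasons)


if __name__ == "__main__":
    # The scenario from the experiment: a voice note asks for £250 to a new payee.
    request = PaymentRequest(requester="harry", payee="floor plan guy", amount_gbp=250.0)
    request.approvers.add("sally")
    allowed, reasons = may_release(request)
    print(allowed, reasons)
```

Under these assumed rules, the request in my experiment would have stalled twice: nobody called Harry back on a known number, and only one person signed off. The point is less the code than the principle that the check does not depend on how convincing the message sounds.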

Here are a few tips for staying safe from SIM swap and other attacks that aim to separate you from your personal data or money:

- Limit the personal information you share online; if possible, avoid posting details such as your address or phone number

- Limit the number of people who can see your posts or other material on social media

- Watch out for phishing attacks and other attempts luring you into providing your sensitive personal data

- If your phone provider offers additional protection on your phone account, such as a PIN code or passcode, make sure to use it

- Use two-factor authentication (2FA), specifically an authentication app or a hardware authentication device

Indeed, the importance of using 2FA cannot be overstated – make sure to also enable it on your WhatsApp account (where it’s called two-step verification) and on any other online accounts that offer it.
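
For readers curious why an authentication app holds up better against SIM swapping than codes sent by text message, the sketch below shows how a time-based one-time password (TOTP, as described in RFC 6238) is typically derived. It assumes the common defaults of HMAC-SHA1 and 30-second time steps; the function name `totp` and its parameters are my own choices for illustration, and real apps may use different settings. The code depends only on a secret stored on the device and the current time, so taking over a phone number does not reveal it.

```python
import base64
import hashlib
import hmac
import struct
import time


def totp(secret_b32: str, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive a TOTP code from a base32 secret (sketch, HMAC-SHA1 assumed)."""
    key = base64.b32decode(secret_b32, casefold=True)
    # The moving factor is the number of whole time steps since the Unix epoch,
    # encoded as an 8-byte big-endian integer.
    counter = struct.pack(">Q", unix_time // step)
    digest = hmac.new(key, counter, hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte window.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Illustrative secret only: the base32 form of the ASCII test key
    # "12345678901234567890" used in RFC 6238's test vectors.
    demo_secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(demo_secret, int(time.time())))
```

This is for understanding only; in practice, use an established authenticator app or a hardware device rather than rolling your own.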